Abstract

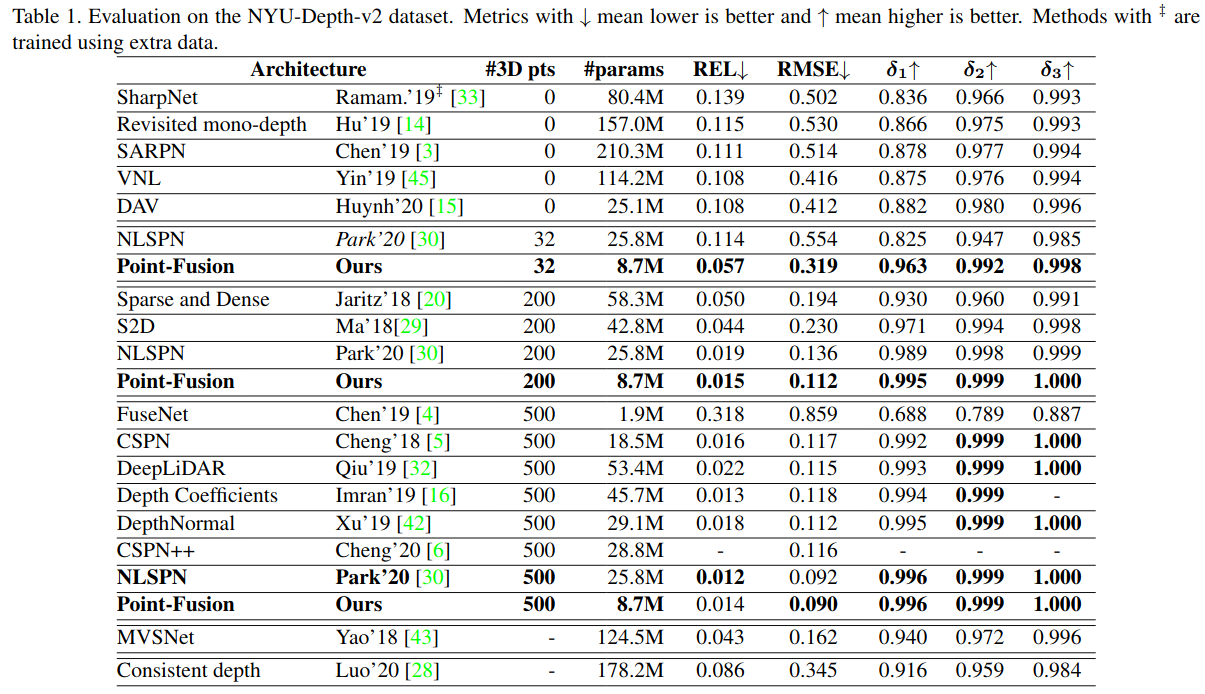

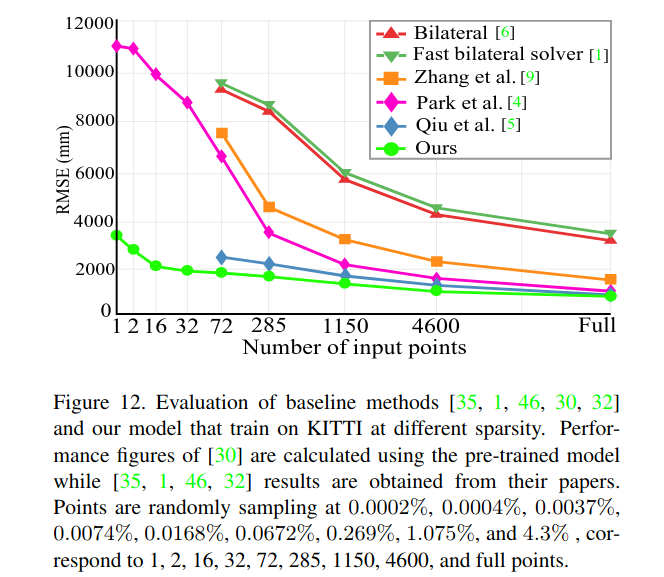

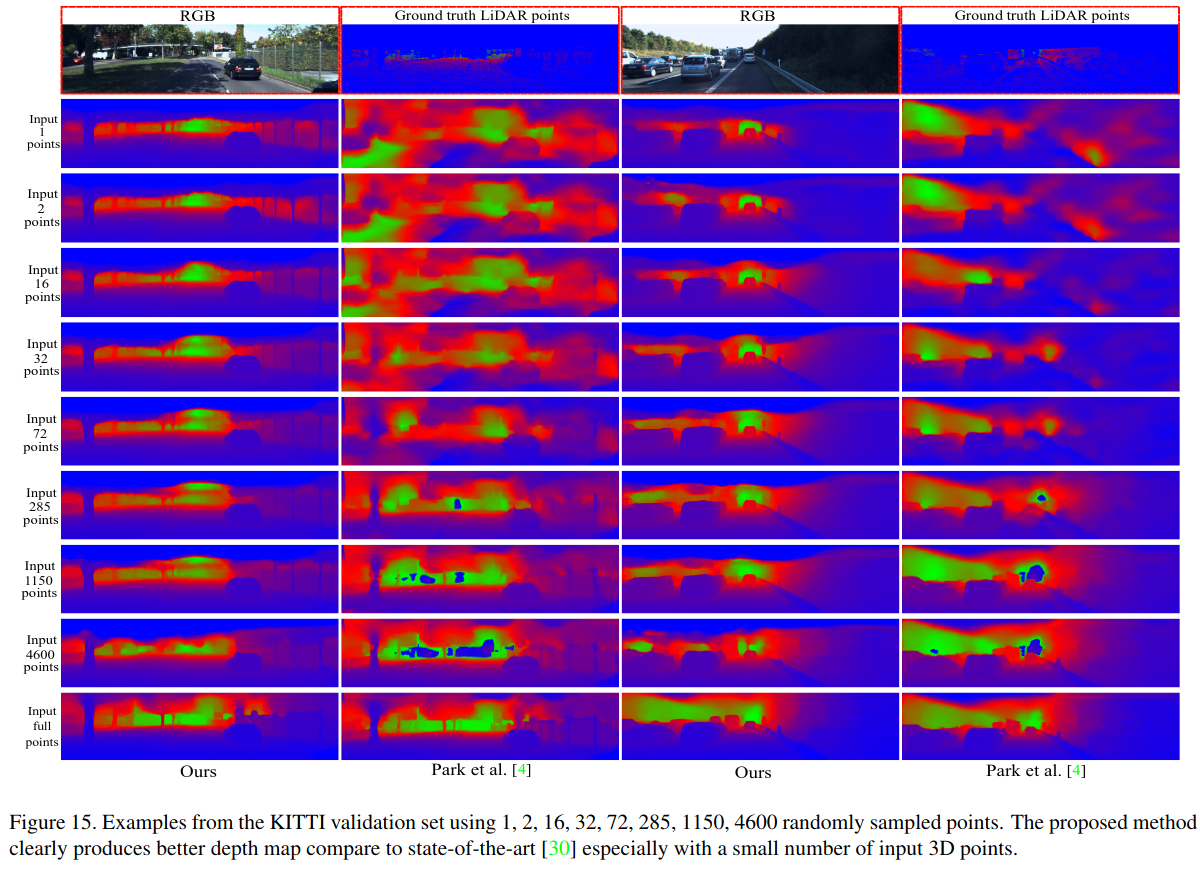

In this paper, we address the problem of fusing monocular depth estimation with a conventional multi-view stereo or SLAM pipeline to exploit the best of both worlds, that is, the accurate dense depth of the former and the light weight of the latter. More specifically, we use a conventional pipeline to produce a sparse 3D point cloud, which is fed to a monocular depth estimation network to enhance its performance. In this way, we achieve accuracy similar to multi-view stereo with considerably fewer weights. We also show that as few as 32 points are sufficient to outperform the best monocular depth estimation methods, and that around 200 points are enough to gain the full advantage of the additional information. Moreover, we demonstrate the efficacy of our approach by integrating it with a SLAM system built into mobile devices.
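The abstract does not say how the sparse point cloud is presented to the network. One common way to do this, shown here only as a minimal sketch and not as the authors' implementation, is to project the points into the image plane and pass the resulting sparse depth map and validity mask as extra input channels. The function name `points_to_sparse_depth`, the pinhole intrinsics matrix `K`, and the `max_points` subsampling parameter are all assumptions introduced for illustration.

```python
import numpy as np


def points_to_sparse_depth(points_cam, K, height, width, max_points=None, seed=0):
    """Project 3D points (camera frame) into a sparse depth map and a validity mask.

    Hypothetical helper, not taken from the paper. Assumes a pinhole camera
    with 3x3 intrinsics K and points given as an (N, 3) array of (x, y, z).
    """
    pts = np.asarray(points_cam, dtype=np.float64).reshape(-1, 3)
    pts = pts[pts[:, 2] > 0]  # keep only points in front of the camera

    # Optionally subsample, e.g. to study how few points are enough.
    if max_points is not None and len(pts) > max_points:
        rng = np.random.default_rng(seed)
        pts = pts[rng.choice(len(pts), size=max_points, replace=False)]

    # Pinhole projection: [u*z, v*z, z]^T = K [x, y, z]^T
    uvw = pts @ np.asarray(K, dtype=np.float64).T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    z = pts[:, 2].astype(np.float32)

    # Discard points that project outside the image.
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z = u[inside], v[inside], z[inside]

    # If several points land on one pixel, keep the nearest one.
    depth = np.full((height, width), np.inf, dtype=np.float32)
    np.minimum.at(depth, (v, u), z)
    mask = np.isfinite(depth)
    depth[~mask] = 0.0
    return depth, mask.astype(np.float32)


if __name__ == "__main__":
    K = np.array([[100.0, 0.0, 32.0],
                  [0.0, 100.0, 24.0],
                  [0.0, 0.0, 1.0]])
    pts = np.array([[0.0, 0.0, 2.0], [0.1, 0.0, 1.0]])
    depth, mask = points_to_sparse_depth(pts, K, height=48, width=64)
    rgb = np.zeros((48, 64, 3), dtype=np.float32)
    # Stack RGB, sparse depth and mask into a 5-channel network input.
    net_input = np.concatenate([rgb, depth[..., None], mask[..., None]], axis=-1)
    print(net_input.shape)
```

Under these assumptions, limiting `max_points` to values such as 32 or 200 reproduces the kind of input sparsity discussed in the abstract. How the network actually consumes the points may differ.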

Experiments with real-world data using sparse point clouds from ARCore

Quantitative results on NYU-v2

Quantitative results on KITTI

Qualitative results on KITTI

Code

Coming soon.